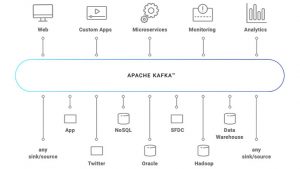

Being based in Germany, Waehner deals with a lot of manufacturing companies that have very specific IoT challenges. This is an area where Kafka is beginning to achieve significant traction. One of the reasons for that is the way that Kafka handles IoT data. Many IoT analytics solutions struggle to manage the volume of data, and customers end up with large databases full of data they never use. Waehner explains why Kafka is a better bet for streaming the data to the analytics solution.

One of the latest talking points around analytics is edge processing. Companies are focused on separating signal from noise using any device they can. Waehner points out that before people start, they need to think about what the edge really is. He makes the point that while it could be a car, for real-time analytics it is better to think of smaller data centres or clusters. In a hospital, for example, you might deploy a single Kafka cluster, which could then feed aggregated health data to a regional level. Another solution is to build models in the data centre and then deploy them much further out.

To hear more of what Waehner had to say, listen to the podcast.

Where can I get it?

Obtain it for Android devices from play.google.com/music/podcasts

Use the Enterprise Times page on Stitcher

Use the Enterprise Times page on Podchaser

Listen to the Enterprise Times channel on Soundcloud

Listen to the podcast (below) or download the podcast to your local device and then listen there