Jordan Wright and Olabode Anise of Duo Security have spent three months analysing 88 million public Twitter accounts and over half a billion tweets. They presented their analysis of Twitter bots at Black Hat in Las Vegas last week. Their paper, Don’t @ Me, is now available from the Duo Security website.

According to Wright: “Malicious bot detection and prevention is a cat-and-mouse game. We anticipate that enlisting the help of the research community will enable discovery of new and improving techniques for tracking bots. However, this is a more complex problem than many realize, and as our paper shows, there is still work to be done.”

What did the research uncover?

The main focus of the research was how to improve the detection of Twitter bots. To do this, Wright and Anise had to build their own dataset against which to run tools. They have provided a link on GitHub to the tools and scripts that they used, along with instructions on how to use them.

As part of the research they discovered:

- A sophisticated cryptocurrency scam botnet consisting of at least 15,000 bots. It was actively siphoning money from its victims by using multiple linked attacks.

- Botnets that were deploying deceptive behaviour to appear genuine. This means not tweeting in bursts, being online for longer periods and using random retweets.

- The use of geolocation in tweets in order to appear as if they were disconnected.

- How botnets create their own fake social networks with controlled interaction to prevent them being easily identified if one part is taken down.

- How tracking fake followers can uncover other fake followers and their networks. Fake followers can also persuade legitimate users to follow them, extending their impact.

- The researchers also built their own anatomy of a fake account that looked at its account attributes, content and the content metadata.

- The use of amplification bots that like tweets to increase a tweet’s popularity. This increases the likelihood of other users clicking on links in the tweets. Those links often point to sites where malware is downloaded onto the user’s device.

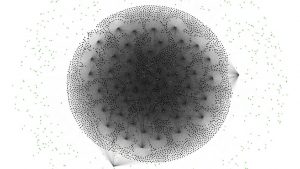

- The discovery of a scam hub consisting of a 3-tier hierarchical structure.

- Mapping of the cryptocurrency scam botnet’s three-tiered, hierarchical structure, consisting of scam publishing bots, “hub” accounts followed by bots and amplification bots.

- Using Unicode characters in tweets instead of traditional ASCII characters.

- Adding various white spaces between words or punctuation.

- Transitioning to spoofing celebrities and high-profile Twitter accounts in addition to cryptocurrency accounts.

- Using screen names that are typos of a spoofed account’s screen name.

- Performing minor editing on the profile picture to avoid image detection.

The latter five behaviours were seen as botnets began to evolve to avoid detection.
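Three of these evasion tricks (Unicode substitution, extra white space and typo screen names) lend themselves to a short illustration. The Python sketch below is not taken from the Duo Security paper or its tooling. The function names, the edit-distance threshold of 2 and the sample strings are all assumptions made for illustration. It folds look-alike compatibility characters back to plain text, collapses padding, and flags a screen name that sits within a couple of edits of a genuine one.

```python
import re
import unicodedata


def normalize_tweet_text(text: str) -> str:
    """Fold compatibility characters to plain forms and collapse white space.

    Illustrative only: NFKC handles fullwidth and similar compatibility
    characters, but it does NOT map cross-script look-alikes (for example
    Cyrillic letters that resemble Latin ones).
    """
    folded = unicodedata.normalize("NFKC", text)
    # Drop invisible format characters (category Cf), such as zero-width spaces.
    folded = "".join(ch for ch in folded if unicodedata.category(ch) != "Cf")
    # Collapse any run of white space into a single space.
    return re.sub(r"\s+", " ", folded).strip()


def edit_distance(a: str, b: str) -> int:
    """Classic Levenshtein distance using a rolling row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def looks_like_typo_of(candidate: str, genuine: str, max_distance: int = 2) -> bool:
    """True if candidate differs from genuine by a few edits (assumed threshold)."""
    a, b = candidate.lower(), genuine.lower()
    return a != b and edit_distance(a, b) <= max_distance


if __name__ == "__main__":
    # Hypothetical sample data: fullwidth letters, a zero-width space and padding.
    print(normalize_tweet_text("Ｆｒｅｅ   ＥＴＨ\u200b  giveaway"))
    print(looks_like_typo_of("exmaple_coin", "example_coin"))
```

A real detector would need far more than this, since the same normalisation would also flatten legitimate non-ASCII text. The sketch only shows why each trick defeats a naive exact-match filter.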

The cryptocurrency scam botnet

In late May 2018 the researchers uncovered a cryptocurrency scam. It used spoofed accounts to advertise fake cryptocurrency giveaways.

The scam uncovered by Duo Security is similar. A spoofed account was created for a legitimate cryptocurrency account. It used the same profile name and details as that account but a slightly different screen name. The bot then replied to a real tweet by the legitimate account. That reply would contain a link to a cryptocurrency giveaway.

Those following the links would get infected with malware and be redirected to sites that would harvest their data. This was done by asking them to create an account on the site or to provide the details of a crypto wallet. The attackers would then use that data to defraud the victim.

Wright and Anise discovered more than 2,600 bots spreading similar links. The bots were connected in two separate networks. Enumerating them uncovered a larger botnet as part of the scam.
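One way to picture the enumeration step is as a breadth-first walk outwards from a handful of known bots, following their connections and keeping only the accounts that also look suspicious. The Python sketch below is a rough illustration under that assumption, not the researchers' actual method. The `get_neighbours` and `is_suspicious` callbacks, the account cap and the toy graph are all hypothetical stand-ins for whatever data source and classifier a real study would use.

```python
from collections import deque
from typing import Callable, Iterable, Set


def enumerate_connected_accounts(
    seeds: Iterable[str],
    get_neighbours: Callable[[str], Iterable[str]],
    is_suspicious: Callable[[str], bool],
    max_accounts: int = 10_000,
) -> Set[str]:
    """Breadth-first expansion from seed accounts through suspicious neighbours."""
    found = set(seeds)
    queue = deque(found)
    while queue:
        account = queue.popleft()
        for neighbour in get_neighbours(account):
            if len(found) >= max_accounts:
                return found  # stop once the (assumed) safety cap is reached
            if neighbour not in found and is_suspicious(neighbour):
                found.add(neighbour)
                queue.append(neighbour)
    return found


# Toy follower graph (hypothetical data): account -> accounts it is connected to.
TOY_GRAPH = {
    "bot_a": ["bot_b", "alice"],
    "bot_b": ["bot_c"],
    "bot_c": ["bot_a"],
    "alice": ["bob"],
}


def toy_neighbours(account: str) -> list:
    return TOY_GRAPH.get(account, [])


def toy_is_suspicious(account: str) -> bool:
    # Placeholder rule for the toy data only; a real check would use a classifier.
    return account.startswith("bot_")


if __name__ == "__main__":
    print(sorted(enumerate_connected_accounts(["bot_a"], toy_neighbours, toy_is_suspicious)))
```

Filtering neighbours before following them keeps the walk from spilling into the legitimate accounts that bots deliberately follow. It also makes the result only as good as the suspicion check itself.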

This type of attack over social media is not new. Back in December 2017, Oprah Winfrey warned her followers of a social media scam running on Instagram. It asked people to sign up for an OWN account on Instagram. It said that by signing up and providing bank details, some lucky winners would receive a Christmas surprise from Winfrey.

In July, US TV and film star Tyler Perry warned of a similar attack taking place on Facebook. In a video post he said: “It’s Tyler Perry. I’m not giving away anything on Facebook. I am not giving away any money. My team has to shut down these things every day.”

Lessons for social media marketing teams

There are some significant points of interest here for social media marketing teams who want to avoid being seen as bots.

- Too few or too many likes can look suspicious

- Not every tweet has to contain a link

- Do not use Unicode characters in tweets; stick to ASCII

- Edit carefully to avoid random extra spaces in a tweet

- Don’t have a single time of day when you send out all your tweets. Spread them over time to get better coverage in different regions and to show the account is monitored

- Respond to tweets and retweets

Some of the changes that Twitter has already made are hitting social marketing teams. A company with multiple legitimate Twitter accounts cannot have identical tweets sent out across its accounts. Instead it needs to send from one account and then retweet from the others. This is forcing social media teams into behaviour that starts to look remarkably like the three-tiered structure above.

There is a need for Twitter to do a better job of engaging with these social marketing teams inside both large and small organisations. If it doesn’t, then the workarounds that are available on the Internet will make some of them look like mini scam bots.

What does this mean?

Since the last US election, the UK Brexit vote and several other national elections, attention on fake social media accounts has ratcheted up. Facebook, LinkedIn and Twitter have all made announcements about the number of fake accounts in their networks. The companies have promised to remove those accounts. Twitter has done the most to rectify the problem. It has removed over 70 million accounts in the last three months and continues to do so.

Removing accounts has had other impacts on both Facebook and Twitter. Both have reported a loss of legitimate accounts recently. Facebook lost 3 million accounts as a result of the Cambridge Analytica scandal. The result was a loss of nearly $120 billion in market value in a single day. The following day Twitter reported the loss of 1 million accounts in July. That led to over $6 billion being wiped off its market value.

For both companies, the financial impact is something they can survive. What they cannot survive is the risk of greater regulatory impact on their businesses. As Duo Security has shown, cybercriminals are getting smarter in how they use bots. The bots are programmed to act like legitimate users. The risk is that in chasing the bots, legitimate users will get caught in the crossfire. This will not help the already soiled reputation of the social media giants.

The question for the social media companies is “can you really police yourselves?” Both Facebook and Twitter are trying to convince us they can handle this. The security community is not convinced.